This architecture is difficult to realise, but some firms are pursuing it. In essence, the LLM supplies the end-user-facing application with intelligent, real-time data and responses. It suits systems that need to assess many documents or pages of information and collate a reply.

Frontend: Astro (SSG) + Tailwind; curated section components (Hero/Services/Testimonials/Contact).

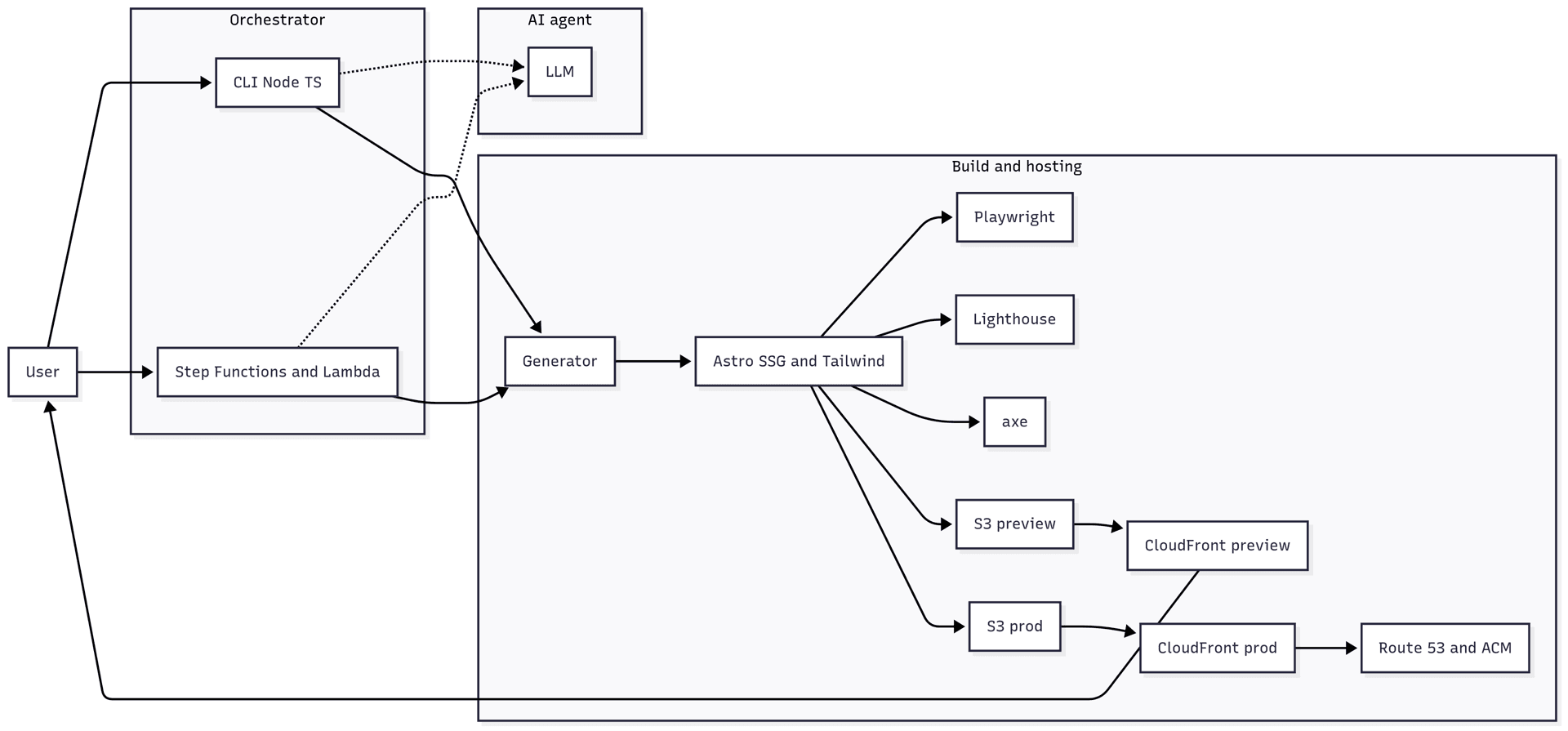

Orchestrator:

Option A: Node/TS CLI (site)

Option B: AWS Step Functions + Lambda (+ CodeBuild)

LLM: Amazon Bedrock (Claude/Nova/etc.) or OpenAI API (called from Lambda/CLI).

CI/CD: CodeCommit + CodeBuild (+ CodePipeline).

Hosting: S3 (static) + CloudFront (+ Route 53, ACM).

Secrets: AWS Secrets Manager or SSM.

User — person giving prompts and approving changes.

AI agent / LLM — generates site.json and content JSON (or JSON Patch) only.
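Because the LLM is limited to emitting JSON, its output can be checked before anything else runs. The following is a minimal sketch of such a check, assuming a hypothetical `site.json` shape (`title` plus a `sections` array). The source names the file but not its schema, so `SiteSpec`, `Section`, and `parseSiteSpec` are illustrative names only. The allowed section types are taken from the curated components listed above.

```typescript
// Hypothetical site.json shape; the real schema is not given in the source.
type SectionType = "Hero" | "Services" | "Testimonials" | "Contact";

interface Section {
  type: SectionType;
  props: Record<string, unknown>;
}

interface SiteSpec {
  title: string;
  sections: Section[];
}

type ParseResult =
  | { ok: true; spec: SiteSpec }
  | { ok: false; errors: string[] };

const ALLOWED_SECTIONS: readonly string[] = ["Hero", "Services", "Testimonials", "Contact"];

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Parses raw LLM output and returns either a typed spec or a list of problems.
function parseSiteSpec(raw: string): ParseResult {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return { ok: false, errors: ["output is not valid JSON"] };
  }
  if (!isRecord(data)) {
    return { ok: false, errors: ["top level must be an object"] };
  }

  const errors: string[] = [];
  if (typeof data.title !== "string" || data.title.length === 0) {
    errors.push("title must be a non-empty string");
  }
  if (!Array.isArray(data.sections)) {
    errors.push("sections must be an array");
  } else {
    data.sections.forEach((section: unknown, i: number) => {
      if (!isRecord(section)) {
        errors.push(`sections[${i}] must be an object`);
        return;
      }
      if (typeof section.type !== "string" || !ALLOWED_SECTIONS.includes(section.type)) {
        errors.push(`sections[${i}].type is not an allowed section`);
      }
      if (!isRecord(section.props)) {
        errors.push(`sections[${i}].props must be an object`);
      }
    });
  }

  if (errors.length > 0) {
    return { ok: false, errors };
  }
  return { ok: true, spec: data as unknown as SiteSpec };
}
```

Rejecting unknown section types at this point means a hallucinated component name never reaches the build.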

Orchestrator / CLI (Node TS) — local runner that calls gen/build/tests/preview/deploy.
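The runner's control flow can be sketched as a fixed sequence that stops at the first failing step and asks for approval before deploying. The step names come from the description above. The `Step` and `RunResult` types, the `approveDeploy` callback, and the synchronous signatures are assumptions made to keep the sketch short; real steps would most likely be asynchronous and shell out to the actual tools.

```typescript
// Step names are from the text; all types and function names are hypothetical.
type StepName = "gen" | "build" | "tests" | "preview" | "deploy";
type Step = () => void; // a step signals failure by throwing

interface RunResult {
  completed: StepName[];
  stoppedAt?: StepName;
  reason?: string;
}

const STEP_ORDER: readonly StepName[] = ["gen", "build", "tests", "preview", "deploy"];

// Runs the steps in order, halting on the first failure or on a declined deploy.
function runPipeline(
  steps: Record<StepName, Step>,
  approveDeploy: () => boolean
): RunResult {
  const completed: StepName[] = [];
  for (const name of STEP_ORDER) {
    // The user approves changes, so deploy is gated on an explicit yes.
    if (name === "deploy" && !approveDeploy()) {
      return { completed, stoppedAt: name, reason: "deploy not approved" };
    }
    try {
      steps[name]();
    } catch (err) {
      const reason = err instanceof Error ? err.message : String(err);
      return { completed, stoppedAt: name, reason };
    }
    completed.push(name);
  }
  return { completed };
}
```

Keeping the steps as injected functions lets the same sequence be driven by the CLI or mirrored as states in the Step Functions variant.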

Orchestrator / Step Functions + Lambda — AWS workflow version of the orchestrator.

Generator — maps site.json to Astro components/templates (no freeform AI code).
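One way to keep freeform AI code out of the build is for the generator to emit page source from a fixed component whitelist, passing the LLM's content in as data only. The sketch below assumes hypothetical component paths under `../components/` and a hypothetical `renderPage` function. It relies on Astro's support for spreading an object into component props.

```typescript
// Whitelist of section components; the file paths are assumptions.
const COMPONENTS = new Map<string, string>([
  ["Hero", "../components/Hero.astro"],
  ["Services", "../components/Services.astro"],
  ["Testimonials", "../components/Testimonials.astro"],
  ["Contact", "../components/Contact.astro"],
]);

interface SectionInput {
  type: string;
  props: Record<string, unknown>;
}

// Builds the source of an .astro page: imports and data in the frontmatter,
// one component tag per section in the body.
function renderPage(sections: SectionInput[]): string {
  const used = new Set<string>();
  const tags: string[] = [];

  sections.forEach((section, i) => {
    if (!COMPONENTS.has(section.type)) {
      throw new Error(`Unknown section type: ${section.type}`);
    }
    used.add(section.type);
    // Content reaches the component as props only, never as markup.
    tags.push(`<${section.type} {...props[${i}]} />`);
  });

  const imports = Array.from(used)
    .sort()
    .map((name) => `import ${name} from "${COMPONENTS.get(name)}";`);
  const data = `const props = ${JSON.stringify(sections.map((s) => s.props))};`;

  return ["---", ...imports, data, "---", ...tags, ""].join("\n");
}
```

For a single Hero section, the result is a page with one import, one `props` array, and one `<Hero {...props[0]} />` tag; any type outside the whitelist aborts generation.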

Astro SSG + Tailwind — builds static pages with prebuilt Tailwind section templates.

Playwright — smoke tests (page renders, sections present, basic flows).

Lighthouse — performance/SEO/accessibility scores and budgets.
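A budget check can be as simple as comparing category scores against minimums. The sketch below reads the `categories` object of a Lighthouse JSON report, where each category carries a `score` between 0 and 1. The threshold values and the `checkBudgets` name are assumptions for illustration, not figures from the source.

```typescript
// Minimal slice of a Lighthouse JSON report: category id -> score in [0, 1].
interface LighthouseReport {
  categories: Record<string, { score: number | null }>;
}

// Hypothetical minimum scores; tune per project.
const BUDGETS: Record<string, number> = {
  performance: 0.9,
  seo: 0.9,
  accessibility: 0.95,
};

// Returns one message per category that is missing or under budget.
function checkBudgets(
  report: LighthouseReport,
  budgets: Record<string, number> = BUDGETS
): string[] {
  const failures: string[] = [];
  for (const category of Object.keys(budgets)) {
    const min = budgets[category];
    const score = report.categories[category]?.score ?? null;
    if (score === null) {
      failures.push(`${category}: no score in report`);
    } else if (score < min) {
      failures.push(`${category}: ${score} is below budget ${min}`);
    }
  }
  return failures;
}
```

An empty result means the build is within budget; the orchestrator can treat any non-empty result as a failed step.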

axe — automated accessibility (WCAG) checks.

S3 preview — bucket holding per-branch build artifacts.

CloudFront preview — CDN serving preview URLs.
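Per-branch previews need a stable mapping from branch name to bucket prefix and URL. The sketch below shows one possible convention; the `previews/` prefix, the slug rules, and the function names are assumptions, and the domain is supplied by the caller.

```typescript
// Turns a branch name into an S3 key prefix (hypothetical convention).
function previewPrefix(branch: string): string {
  const slug = branch
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-") // collapse anything unsafe into a dash
    .replace(/^-+|-+$/g, ""); // trim leading and trailing dashes
  if (slug.length === 0) {
    throw new Error(`Branch name has no usable characters: "${branch}"`);
  }
  return `previews/${slug}/`;
}

// Builds the preview URL served by the preview CDN for a given branch.
function previewUrl(branch: string, domain: string): string {
  return `https://${domain}/${previewPrefix(branch)}index.html`;
}
```

With this convention, `feature/New Hero` maps to `previews/feature-new-hero/`. Note that distinct branches such as `feature/a` and `feature-a` would collide, so a real setup might append a short commit hash.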

S3 prod — production static site bucket.

CloudFront prod — CDN for the live site.

Route 53 + ACM — DNS and TLS certificate for the live domain.