CHALLENGES

1. Data Ingestion and Velocity:

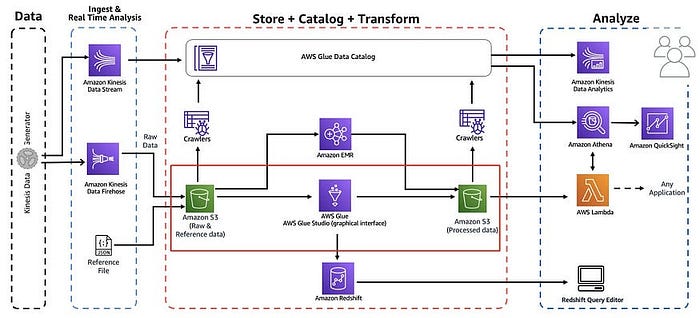

- Handling high-volume, high-velocity data: Ingesting and processing massive amounts of data that arrive at high speed requires robust, scalable services such as Amazon Kinesis Data Streams, Amazon Data Firehose (formerly Kinesis Data Firehose), and Amazon Managed Service for Apache Flink (formerly Kinesis Data Analytics), or Amazon MSK (Managed Streaming for Apache Kafka).

- Ensuring data durability and reliability: No data should be lost during ingestion, even when components fail. This requires correct configuration of buffering, replication, and persistence, for example the retention period of a Kinesis data stream, the replication factor of Kafka topics, and producer retries for records the service rejects.
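One durability detail is easy to miss: a Kinesis Data Streams `PutRecords` call can partially fail (for example when a shard is throttled). The response has a `FailedRecordCount` and a `Records` list that lines up positionally with the request, where failed entries carry an `ErrorCode`. The minimal sketch below, assuming Python, resends only the failed entries with exponential backoff. The function name, backoff values, and the injected `put_records` callable are illustrative assumptions, not an AWS-provided helper; with boto3 the callable could be `lambda batch: kinesis.put_records(StreamName="example-stream", Records=batch)`, where the stream name is hypothetical.

```python
import time
from typing import Callable, Dict, List


def put_with_retry(
    put_records: Callable[[List[Dict]], Dict],
    records: List[Dict],
    max_attempts: int = 5,
    base_delay: float = 0.1,
) -> List[Dict]:
    """Send a batch and resend only the entries that failed.

    `put_records` takes a list of {"Data": bytes, "PartitionKey": str} entries
    and returns a dict shaped like a Kinesis PutRecords response.
    Returns the entries still failing after `max_attempts` (empty on success).
    """
    pending = records
    for attempt in range(max_attempts):
        response = put_records(pending)
        if response["FailedRecordCount"] == 0:
            return []
        # Result i corresponds to request entry i; failed results have "ErrorCode".
        pending = [
            entry
            for entry, result in zip(pending, response["Records"])
            if "ErrorCode" in result
        ]
        if attempt < max_attempts - 1:
            # Exponential backoff before retrying only the failed subset.
            time.sleep(base_delay * (2 ** attempt))
    return pending
```

Entries that still fail after the last attempt are returned rather than dropped, so the caller can write them to a dead-letter location instead of losing them.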

2. Data Processing and Transformation:

- Real-time data processing: Performing transformations, aggregations, and enrichments on streaming data with low latency requires stream processing frameworks such as Apache Flink (via Amazon Managed Service for Apache Flink, formerly Amazon Kinesis Data Analytics for Apache Flink) or Spark Structured Streaming (for example on Amazon EMR or in AWS Glue streaming jobs).

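- Schema evolution: Handling changes to the data schema over time without disrupting the processing pipeline can be complex. AWS Glue Schema Registry helps by versioning schemas and enforcing compatibility rules for producers and consumers on Apache Kafka (including Amazon MSK) and Amazon Kinesis Data Streams.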
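To make "aggregations on streaming data" concrete, the sketch below shows the logic of a tumbling-window count, the kind of operation a Flink or Spark Structured Streaming job would run per key. It is plain Python under simplifying assumptions: events arrive as hypothetical `(key, event_time_ms)` tuples, and late or out-of-order data is ignored, which real frameworks handle with watermarks.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Event = Tuple[str, int]  # (key, event_time_ms); illustrative shape only


def tumbling_window_counts(
    events: Iterable[Event], window_ms: int
) -> Dict[Tuple[int, str], int]:
    """Count events per key in fixed, non-overlapping event-time windows.

    Returns {(window_start_ms, key): count}.
    """
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for key, ts in events:
        # Each event falls in exactly one window: [window_start, window_start + window_ms).
        window_start = ts - (ts % window_ms)
        counts[(window_start, key)] += 1
    return dict(counts)
```

For example, with one-minute windows, events for `acct-1` at 1,000 ms and 59,999 ms land in the window starting at 0, while an event at 60,000 ms starts the next window.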

3. Data Storage and Management:

- Optimizing storage for real-time and batch analytics: Real-time queries need low-latency access, while large volumes of historical data need cost-effective storage. Balancing the two often involves combining services such as Amazon S3, Amazon DynamoDB, and Amazon Redshift.

- Data partitioning and indexing: Partitioning and indexing data in the data lake (for example, by event date and hour) lets query engines skip irrelevant data, which is essential for fast querying and analysis. The AWS Glue Data Catalog manages table metadata, schemas, and partition information.
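A common partitioning layout is Hive-style `key=value` prefixes in S3, which AWS Glue crawlers can register as partition columns and which lets Amazon Athena prune partitions when a query filters on them. The sketch below builds such a prefix from an event timestamp; the bucket and table names, and the choice of year/month/day/hour as partition keys, are hypothetical. It normalizes to UTC so events from different time zones land in consistent partitions.

```python
from datetime import datetime, timezone


def partition_prefix(bucket: str, table: str, event_time: datetime) -> str:
    """Build a Hive-style S3 prefix partitioned by UTC year/month/day/hour."""
    if event_time.tzinfo is None:
        # A naive datetime would be interpreted as local time; require an explicit zone.
        raise ValueError("event_time must be timezone-aware")
    t = event_time.astimezone(timezone.utc)
    return (
        f"s3://{bucket}/{table}/"
        f"year={t.year:04d}/month={t.month:02d}/day={t.day:02d}/hour={t.hour:02d}/"
    )
```

For instance, an event at 2024-05-01 13:45 UTC for a hypothetical `transactions` table in `example-lake` maps to `s3://example-lake/transactions/year=2024/month=05/day=01/hour=13/`.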

4. Data Governance and Security:

- Ensuring data quality and consistency: Maintaining data quality and consistency across the data lake requires robust data validation and cleansing processes.

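- Implementing security and access control: Securing sensitive data and enforcing fine-grained access control policies are crucial. AWS Identity and Access Management (IAM) controls access to AWS resources, AWS Lake Formation adds table-, column-, and row-level permissions for data lake tables, and AWS Key Management Service (AWS KMS) manages encryption keys.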
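Validation and cleansing usually mean checking each record against expected rules and routing failures to a quarantine (dead-letter) location instead of silently dropping them. The sketch below shows that pattern in Python for a hypothetical transaction record; the field names and rules are assumptions for illustration, and amounts are kept as decimal strings to avoid floating-point rounding.

```python
from decimal import Decimal, InvalidOperation
from typing import Dict, List, Tuple

# Hypothetical schema: required field -> expected Python type.
REQUIRED_FIELDS: Dict[str, type] = {
    "transaction_id": str,
    "account_id": str,
    "amount": str,
    "currency": str,
}


def validate(record: Dict) -> List[str]:
    """Return a list of validation errors (empty if the record is valid)."""
    errors = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in record:
            errors.append(f"missing {name}")
        elif not isinstance(record[name], expected):
            errors.append(f"{name} is not {expected.__name__}")
    if not errors:
        # Value-level checks run only once the structure is known to be sound.
        try:
            Decimal(record["amount"])
        except InvalidOperation:
            errors.append("amount is not a decimal")
        currency = record["currency"]
        if not (len(currency) == 3 and currency.isalpha()):
            errors.append("currency is not a 3-letter code")
    return errors


def split_valid(records: List[Dict]) -> Tuple[List[Dict], List[Tuple[Dict, List[str]]]]:
    """Separate valid records from rejected ones, keeping the reasons for rejection."""
    valid, rejected = [], []
    for record in records:
        errs = validate(record)
        if errs:
            rejected.append((record, errs))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping the error list alongside each rejected record makes the quarantine area useful for debugging upstream producers.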

5. Operational Complexity:

- Monitoring and managing the streaming pipeline: Monitoring the health and performance of the entire streaming pipeline, including ingestion, processing, and storage components, is crucial for ensuring reliability and performance. Amazon CloudWatch provides monitoring and logging capabilities.

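- Cost optimization: Managing the costs of the various AWS services used in the data lake requires careful planning and ongoing optimization, for example right-sizing stream capacity and moving older data to lower-cost Amazon S3 storage classes.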
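For provisioned-mode Kinesis Data Streams, one cost lever is running no more shards than the workload needs. The rough sizing sketch below assumes the documented per-shard limits (1 MB/s or 1,000 records/s for writes, 2 MB/s for reads shared by all standard consumers) and assumes every standard consumer reads the whole stream. It ignores headroom for spikes, uneven partition-key distribution, and enhanced fan-out consumers (which get their own 2 MB/s per shard); on-demand mode scales capacity automatically and is billed differently.

```python
import math


def estimate_shards(
    write_mb_per_s: float,
    records_per_s: float,
    standard_consumers: int,
) -> int:
    """Estimate provisioned Kinesis Data Streams shards from per-shard limits."""
    # Write side: 1 MB/s per shard.
    write_shards = math.ceil(write_mb_per_s / 1.0)
    # Write side: 1,000 records/s per shard.
    record_shards = math.ceil(records_per_s / 1000)
    # Read side: 2 MB/s per shard, shared by all standard consumers.
    read_shards = math.ceil(write_mb_per_s * standard_consumers / 2.0)
    # The tightest constraint wins; a stream needs at least one shard.
    return max(1, write_shards, record_shards, read_shards)
```

For example, 3.5 MB/s of writes at 2,500 records/s, read by three standard consumers, needs six shards: the read side (10.5 MB/s against 2 MB/s per shard) is the binding constraint, not the write side.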

INDUSTRY

Finance